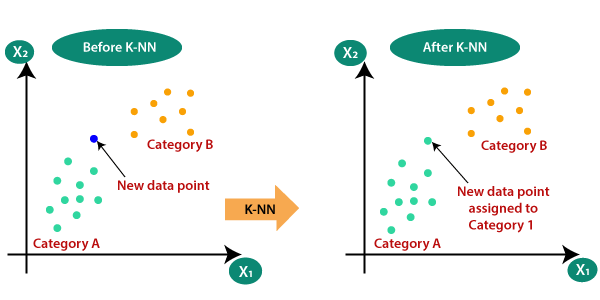

K-Nearest Neighbors (KNN) is a machine learning algorithm that can be used for both regression and classification tasks. It examines the labels of a chosen number of data points surrounding a target data point in order to predict the class in which the data point ...
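As a rough illustration of the idea in this excerpt, here is a minimal classification sketch in plain Python. It assumes Euclidean distance and a simple majority vote; the function name `knn_predict` and the toy data are hypothetical and not taken from the post.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest points.

    Assumption: Euclidean distance; other metrics are equally valid for KNN.
    """
    # Pair every training point's distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the labels of the k closest points.
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    # The most common label among the neighbors is the prediction.
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy data (made up for illustration): two well-separated clusters.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 7.5), (6.5, 8.0)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (2.0, 2.0), k=3))
print(knn_predict(points, labels, (6.0, 7.0), k=3))
```

The "chosen number" of neighbors is the parameter `k`. For regression, the vote would typically be replaced by an average of the neighbors' values.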

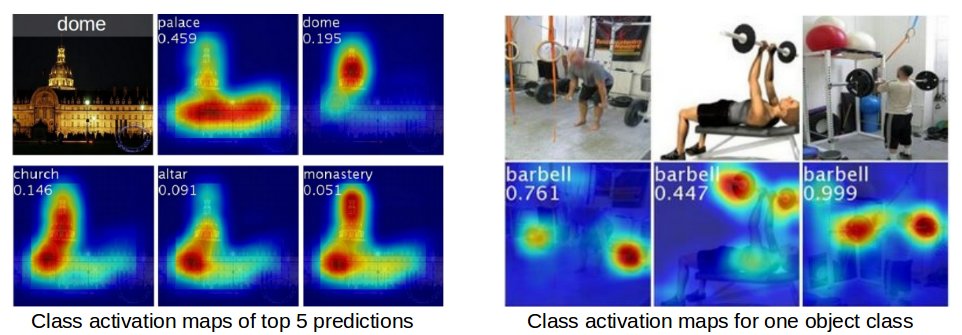

Attention is one of the most important ideas in deep learning. Although the mechanism is now used in a variety of problems, such as image captioning, it was originally designed for neural machine translation with Seq2Seq models. Seq2Seq model: The seq2seq model is normally composed of an encoder-decoder architecture, in ...

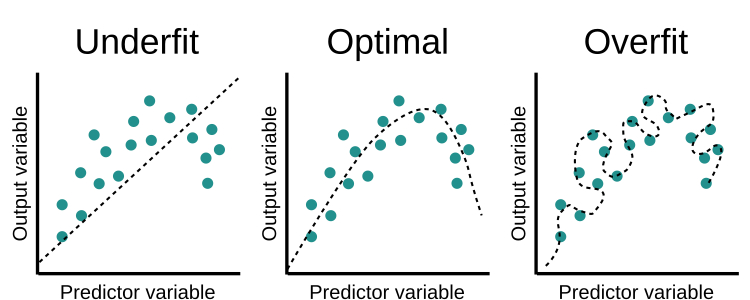

Overfitting occurs when a model tries to fit a trend in data that is too noisy. It is caused by an overly complex model with too many parameters. An overfitted model is inaccurate because the trend it learns does not reflect the reality in the data. This can be detected if the model produces good results on the data ...
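The excerpt is cut off, but a common way to check for overfitting is to compare error on the training data with error on data the model has not seen. The sketch below is an illustration under that assumption, not the post's own method: a hypothetical "memorizer" that stores every training point stands in for an overly complex model, and a least-squares line stands in for a simple one. All names and the synthetic data are made up.

```python
import random


def fit_line(xs, ys):
    """Simple model: least-squares straight line (two parameters)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Overly flexible model: returns the y of the closest stored training x."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of `model` on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)  # fixed seed so runs are repeatable


def sample(n):
    # Synthetic data (an assumption): a linear trend plus Gaussian noise.
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 2) for x in xs]
    return xs, ys


train_x, train_y = sample(30)
test_x, test_y = sample(30)

for name, fit in [("line", fit_line), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    print(
        name,
        "train MSE:", round(mse(model, train_x, train_y), 3),
        "held-out MSE:", round(mse(model, test_x, test_y), 3),
    )
```

The memorizer reproduces the training data exactly, noise included, so its training error is zero. A large gap between its training error and its held-out error is the usual warning sign of overfitting.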